The half year update: Check out what we've added to hale»connect this year!

2021 has been an eventful year, and we've had many exciting developments including further support for the increasingly popular GeoPackage format, more CSW capabilities, and a host of other improvements.

Here's what's new:

Information for Users

New Features

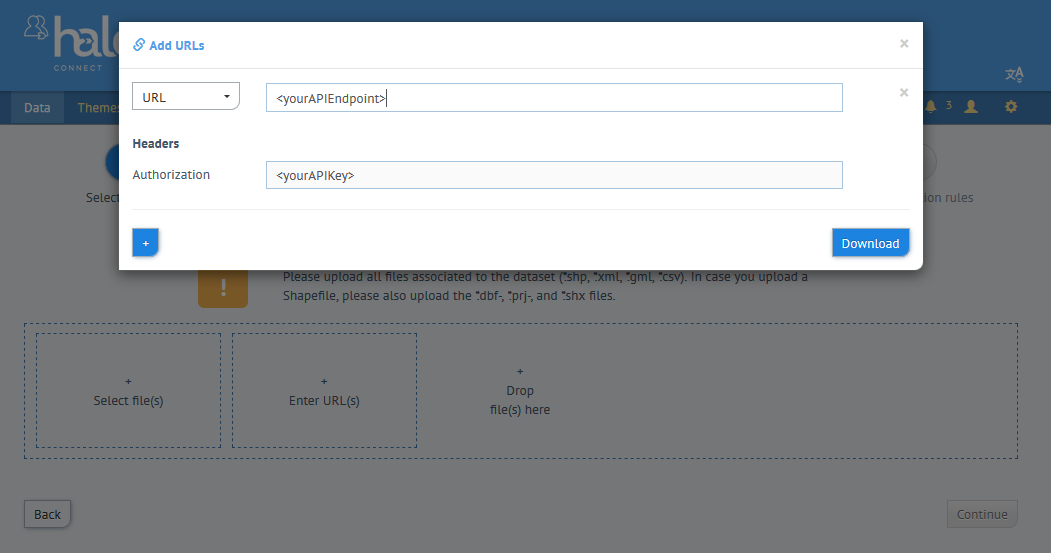

- Users uploading data to the hale»connect platform via URL can now add Authorization headers to HTTP(S) requests to provide the required authentication, as shown in the image above.

- Organizations that have their own CSW configured can now edit values in the CSW capabilities documents by using variables on the organization profile page.

Note: Activation of the CSW_INSPIRE_METADATA_CONFIG feature toggle is required.

- The dataset resource list can now be filtered by organization using the text filter.

- hale»connect now supports GeoPackage as source data input to online transformations via URL.

- Service publishing is now enabled with custom SLDs that include multiple feature types in one layer.
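To illustrate the Authorization header mentioned in the list above, here is a minimal Python sketch of what such a header looks like on a plain HTTP request. It assumes HTTP Basic authentication, which is only one possible scheme; the URL, user name, and password are hypothetical, and the function name is made up for this example. The request is only built, not sent.

```python
import base64
import urllib.request


def build_authorized_request(url: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a GET request carrying a Basic Authorization header."""
    # HTTP Basic auth: base64("username:password"), prefixed with "Basic ".
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})


# Hypothetical URL and credentials, for illustration only.
request = build_authorized_request("https://example.com/data/parcels.gpkg", "user", "secret")
print(request.get_header("Authorization"))
```

The printed value is the kind of string that would be entered as the header value when the data source requires Basic authentication; other schemes (for example bearer tokens) use a different value format.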

Changes

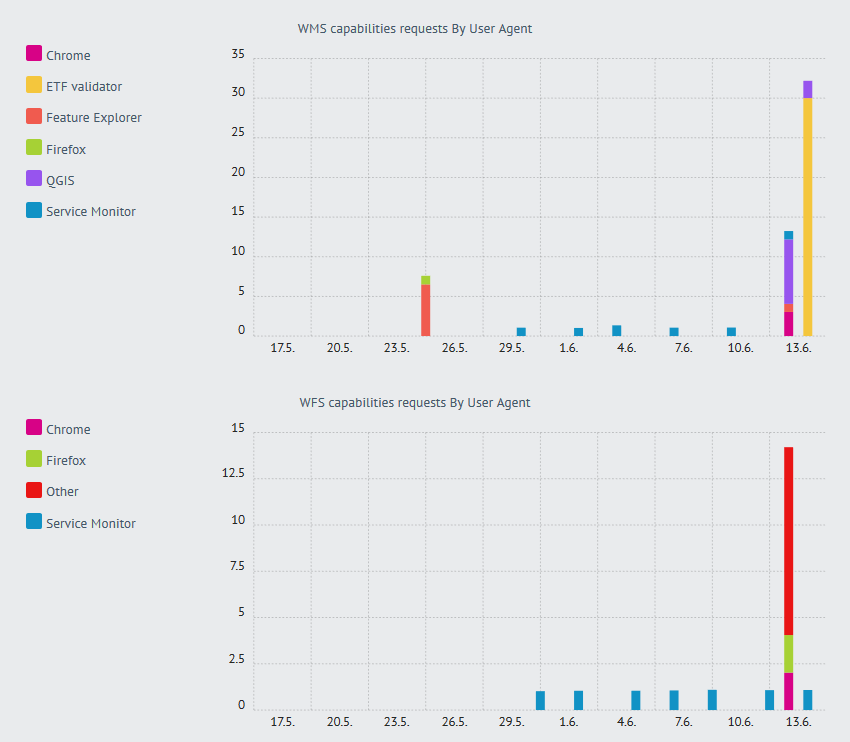

- Usage statistics graphs now use the same color for the same user agent across multiple graphs, as shown in the image above.

- Metadata input fields that allow you to select predefined values as well as enter free text now save the free text when you exit the field (and not only after you press "Enter").

- In the WMS service settings, there is now the option of restricting rendering in view services to the bounding box in the metadata. Whether the option is activated by default depends on the system configuration. If the option is activated, data may not be displayed in a view service if the bounding box in the metadata is incorrect or the axes are swapped.

Note: Currently, this setting for data set series cannot be adjusted via the user interface.

- Data fields that are interpreted as a number in the file analysis are no longer treated as a floating point number if values are all integers.

- To improve overall performance, the system-wide display level configuration for raster layers is now checked earlier.

- Terms of use / useConstraints in the metadata: Descriptions for given code list values can now be adapted.

- Use restrictions / useLimitations in the metadata: GDI-DE-specific rules only apply if the country specified in the metadata is Germany. If this is not specified, the GDI-DE standard value is no longer set if the data set is an INSPIRE data set ("INSPIRE" Category) so that INSPIRE Monitoring correctly recognizes the metadata as being in accordance with TG 2.0.

- Outdated GDI-DE test suite tests were removed from hale»connect.
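The bounding-box restriction for view services described in the list above can be pictured with a small Python sketch. This is not how Mapproxy implements the check; it is only an illustration, under the assumption that bounding boxes are given as (min_lon, min_lat, max_lon, max_lat) in WGS 84 degrees. The function name, the `buffer_deg` parameter, and all coordinates are chosen for this example.

```python
from typing import Tuple

BBox = Tuple[float, float, float, float]  # (min_lon, min_lat, max_lon, max_lat), WGS 84 degrees


def intersects(request_bbox: BBox, metadata_bbox: BBox, buffer_deg: float = 0.0) -> bool:
    """Return True if the request overlaps the (optionally buffered) metadata bounding box."""
    min_x, min_y, max_x, max_y = metadata_bbox
    # Grow the metadata box by the buffer on every side.
    min_x, min_y = min_x - buffer_deg, min_y - buffer_deg
    max_x, max_y = max_x + buffer_deg, max_y + buffer_deg
    r_min_x, r_min_y, r_max_x, r_max_y = request_bbox
    return r_min_x <= max_x and r_max_x >= min_x and r_min_y <= max_y and r_max_y >= min_y


# Illustrative values: a dataset covering roughly Germany, and a request around Berlin.
correct = (5.9, 47.3, 15.0, 55.1)
swapped = (47.3, 5.9, 55.1, 15.0)  # same numbers, but latitude and longitude exchanged
berlin = (13.0, 52.3, 13.8, 52.7)

print(intersects(berlin, correct))  # request overlaps the data area
print(intersects(berlin, swapped))  # no overlap, so nothing would be rendered
```

With swapped axes the request no longer overlaps the metadata bounding box, which is why an incorrect bounding box can lead to an empty map even though the data itself is fine.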

Fixes

- Some unnecessary error messages that occur if a user does not have sufficient privileges to access certain information have been removed.

- An error that caused status messages to be displayed incorrectly on the dataset overview page was fixed.

- An error was fixed that caused services to remain password protected after the password had been removed.

- File names of uploaded shapefiles can start with a number.

- Fixed a bug with downloading files that need to be converted before adding to a dataset.

- The automatic process that fills metadata now waits for any outstanding processes to calculate the attribute coverage, to be able to access the results.

- Services of datasets in a dataset series no longer count for the capacity points (only the services of the series itself).

- An error that deleted uploaded files that were not associated with a dataset (e.g. uploaded logos for organizations) was fixed.

- Several "priority dataset" keywords are now correctly represented in the metadata when published.

- When using the hybrid mode, no geometries were saved if no geometry was referenced in the SLD. This has been fixed by now always trying to identify a standard geometry in a feature type.

- The Mapproxy cache for raster layers in a series no longer resets every time the series is changed. This now only happens when changes are made to the relevant individual data set.

- An error has been corrected which caused the WFS to deliver invalid XML with missing namespace definitions. This also affected corresponding GetFeatureInfo queries.

- GetFeatureInfo requests now return complete XML when the INFO_FORMAT parameter is of type text/xml.

- GetFeatureInfo requests return results for raster/vector datasets.

- An issue was fixed that was related to schema location when the same schema definition file is referenced directly in a combined schema and imported by a schema contained in the same combined schema file.

- The AuthorityUrl.name element can now only contain valid values for the data type NMTOKEN.

- Added redirection handling for INSPIRE schemas.

- A fix to prevent global capacity points updates from running during the day was implemented.

- A fix was implemented to use string representations of number values as autofill results, when available.

Information for Systems Administrators

Mapproxy: Adjustments to the Docker Image

Until now, Mapproxy could become a bottleneck when processing WMS requests because the previous configuration handled many parallel requests poorly. The runtime environment in which Mapproxy runs in the Docker container has been adapted, as well as the procedure for deleting caches. As a result, Mapproxy no longer runs as the root user within the container, whereas the caches created so far are owned by the root user. To ensure access to these caches, the permissions must be adapted so that the mapproxy user of the container has read and write access. This can be done, for example, via a shell in the new mapproxy container:

    chown -R mapproxy:mapproxy /mapproxy/cache/

Note: As an alternative, there is also the possibility to keep Mapproxy running as root, but this should only be used as an interim solution - if you are interested, we can provide the appropriate configuration option.

Mapproxy: Extended configuration options

Mapproxy acts as a buffer in the system that intercepts GetMap requests to view services and, if possible, serves them from the built-up cache. It thereby determines what kind of requests are processed by deegree. The behavior of Mapproxy can now be adjusted in some aspects. The configuration options are currently only available at the system configuration level, with the exception of the setting that restricts rendering to the bounding box of the metadata.

Important: Changes to the configuration are not automatically applied to existing publications. The new actions on the debug page of the service-publisher should be used for this purpose:

- To update the mapproxy configuration only:

  - "Update mapproxy configuration for all publications" for all existing publications

  - "update-mapproxy" for a single publication

- To update the mapproxy configuration and to reset the cache (e.g. when changing the cache backend):

  - "Update mapproxy configuration and clear cache for all publications" for all existing publications

  - "reset-mapproxy" for a single publication

The new configuration options are described below. More information on the individual options can also be found in the Mapproxy documentation.

Reduced re-start times of unresponsive WMS/WFS services

With many publications, the initialization of the OWS services can take a long time. If the feature toggle to divide the configuration workspace into sub-workspaces per organization is used, the configurations are initialized in parallel. This significantly accelerates the start of WMS/WFS services after a failure.

Before / after examples from our systems:

Before: approx. 5 minutes - after: approx. 90 seconds (10k+ Services)

Before: between 30 and 50 minutes - after: between 5 and 8 minutes (60k+ Services)

If you are not yet using sub-workspaces in your deployment and are interested in them, please contact us. Startup time only improves significantly if the publications in the system are well distributed among different organizations.

Cache backend

By default, Mapproxy saves the individual cached tiles as individual files in a specific directory structure. This can quickly lead to millions of files being used for a cache. This, in turn, can be a problem if the file system's limitations on the maximum number of files (or inodes) are reached. Once the limit has been reached and no more files can be created, it is particularly critical if data other than the caches are on the same file system. It is now possible to adapt the backend used for the caches. The options are as follows:

- file - the standard setting with storage as individual files

- sqlite - Saving a zoom level in a SQLite file

- geopackage - Saving a zoom level in a geopackage file

Recommendation: We recommend using the sqlite backend, which we already use in production. You should check whether the number of files in the file system could become a problem (e.g. with df -i). Currently, we do not support any mechanism to migrate caches between different backends. The old cache should therefore be deleted when updating the configuration for existing publications. In principle, however, Mapproxy provides a tool with which a migration can be carried out.
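To get a feeling for why the file backend can run into inode limits, the following Python sketch estimates an upper bound for the number of cache files per backend. It assumes a global grid that starts with a single tile at level 0 and quadruples its tile count with every level (as in the grid listed in the next section), one file per tile for the file backend, and one file per zoom level for the sqlite and geopackage backends. The function names are made up for this example, and real caches are usually much smaller because a dataset rarely covers the whole world.

```python
def tiles_at_level(level: int) -> int:
    """Tiles in one zoom level of a grid that starts with 1 tile and quadruples per level."""
    return 4 ** level


def tiles_up_to_level(max_level: int) -> int:
    """Total number of tiles in levels 0..max_level (inclusive)."""
    return sum(tiles_at_level(level) for level in range(max_level + 1))


def max_cache_files(backend: str, max_level: int) -> int:
    """Upper bound of files for a single fully seeded cache (assumption-based estimate)."""
    if backend == "file":
        return tiles_up_to_level(max_level)  # one file per tile
    if backend in ("sqlite", "geopackage"):
        return max_level + 1  # one file per zoom level
    raise ValueError(f"unknown backend: {backend}")


for backend in ("file", "sqlite"):
    print(backend, max_cache_files(backend, 12))
```

The gap between the two numbers grows by roughly a factor of four with every additional zoom level, which is the reason the choice of backend matters most for deep caches.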

Restriction of the cache to certain zoom levels

In hale»connect, Mapproxy uses a uniform tile grid for all publications based on EPSG:3857:

    GLOBAL_WEBMERCATOR:
        Configuration:
            bbox*: [-20037508.342789244, -20037508.342789244, 20037508.342789244, 20037508.342789244]
            origin: 'nw'
            srs: 'EPSG:3857'
            tile_size*: [256, 256]
        Levels: Resolutions, # x * y = total tiles
            00: 156543.03392804097, # 1 * 1 = 1
            01: 78271.51696402048, # 2 * 2 = 4
            02: 39135.75848201024, # 4 * 4 = 16
            03: 19567.87924100512, # 8 * 8 = 64
            04: 9783.93962050256, # 16 * 16 = 256
            05: 4891.96981025128, # 32 * 32 = 1024
            06: 2445.98490512564, # 64 * 64 = 4096
            07: 1222.99245256282, # 128 * 128 = 16384
            08: 611.49622628141, # 256 * 256 = 65536
            09: 305.748113140705, # 512 * 512 = 262144
            10: 152.8740565703525, # 1024 * 1024 = 1.05M
            11: 76.43702828517625, # 2048 * 2048 = 4.19M
            12: 38.21851414258813, # 4096 * 4096 = 16.78M
            13: 19.109257071294063, # 8192 * 8192 = 67.11M
            14: 9.554628535647032, # 16384 * 16384 = 268.44M
            15: 4.777314267823516, # 32768 * 32768 = 1073.74M
            16: 2.388657133911758, # 65536 * 65536 = 4294.97M
            17: 1.194328566955879, # 131072 * 131072 = 17179.87M
            18: 0.5971642834779395, # 262144 * 262144 = 68719.48M
            19: 0.29858214173896974, # 524288 * 524288 = 274877.91M

Mapproxy can now be configured not to cache tiles from a certain zoom level but always to make requests to deegree:

    service_publisher:
      map_proxy:
        # Don't cache but use direct access beginning with the given level
        # (negative value to disable)
        # For example: A value of 18 means levels 0-17 are cached but levels >=18 are not
        use_direct_from_level: -1

Restricting queries and cache to the bounding box of the metadata

Since the data of a view service rarely covers the whole world, it makes sense to spatially limit the cache and the requests to deegree. This can now be done with the help of the bounding box of the metadata. When the restriction is activated, requests that lie outside of the bounding box automatically return an empty image, without a request being made to deegree and without the cache having to be expanded. In addition to activating the restriction, a buffer can be configured around the bounding box to avoid content being cut off (which can happen, for example, with raster data):

    map_proxy:
      # limit mapproxy cache and source requests to metadata bounding box
      # otherwise the cache may encompass the whole world-wide grid (see above)
      coverage:
        enabled: true
        buffer: 0.01 # buffer for WGS 84 bounding box (to for instance compensate rasters that exceed the vector bounding box); 0.01 ~ 1km

Monitoring: Alerts on file systems

The existing alerts on file systems, which are meant to notify you when a file system is almost full or no more file handles are available, were unfortunately not fully functional due to a change in the names of the metrics. These alerts have been revised and expanded so that they also detect when a file system is close to its maximum number of files. By default, an alert is raised when less than 10% of the space or files (inodes) is still available, but this limit can be adjusted:

    alerts:
      filesystem:
        # default limit in percent of available space / inodes, must be an integer value
        available_limit: 10
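The condition behind these alerts can be approximated locally with a short Python sketch, comparable to checking `df` and `df -i` by hand. It is not the alert rule used by the monitoring stack, which works on collected metrics. The function names are made up for this example, the default of 10 mirrors `available_limit` above, and `os.statvfs` is only available on Unix-like systems.

```python
import os


def available_percentages(path: str) -> dict:
    """Return the percentage of free space and free inodes for the file system containing path."""
    stats = os.statvfs(path)
    # Some file systems report 0 total inodes; treat that as "no inode limit".
    space = 100.0 * stats.f_bavail / stats.f_blocks if stats.f_blocks else 100.0
    inodes = 100.0 * stats.f_favail / stats.f_files if stats.f_files else 100.0
    return {"space": space, "inodes": inodes}


def breached(percentages: dict, available_limit: int = 10) -> list:
    """Names of resources whose available share has dropped below the limit (in percent)."""
    return sorted(name for name, pct in percentages.items() if pct < available_limit)


if __name__ == "__main__":
    current = available_percentages("/")
    print(current, breached(current))
```

Running this against the mount point that holds the Mapproxy caches gives a quick indication of whether the inode limit, rather than disk space, is the resource that is running out.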