Guessing Better: Embracing uncertainty in (forest) decisions

Forests are messy, models are wrong.

Numerical models are amazing. They are clean, logical, and reassuringly mathematical. But as George Box reminds us, “all models are wrong, but some are useful”. This is especially true for dynamic ecological systems such as forests.

Forest development is difficult to predict. When the conditions are right, trees grow, but when storms and drought hit, they may die. What exactly will happen is highly uncertain.

“The judicious application of sensitivity analysis techniques appears to be the key ingredient needed to draw out the maximum capabilities of mathematical modelling” says H. Rabitz. Uncertainty isn’t just a byproduct of imperfect data or incomplete knowledge; it’s a fundamental part of how the world works, especially when we try to model the future of ecosystems under changing climates, shifting stakeholder values, and evolving policy landscapes.

Here’s the twist: uncertainty is not our enemy. If used properly, it becomes a powerful tool, one that helps us make better, more robust decisions.

This is exactly where sensitivity analysis steps in. Instead of clinging to a single “most likely” future, as is often done in weather forecasts, we take many possible futures into account. We let the uncertainties speak, quantify their effects, and listen carefully to what they reveal.

This enables us to make resilient choices in the face of what we don’t (and can’t) fully know. This isn't just about forests. It's about making better decisions.

Where uncertainty comes from

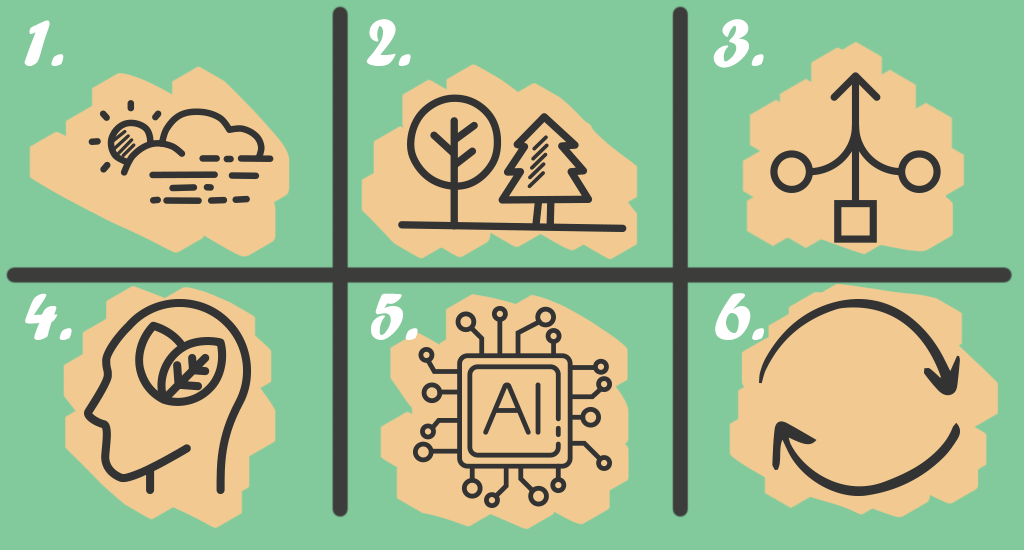

So, where does all this uncertainty in forest decision-making come from?

Let’s break it down:

Model uncertainty: The forest is not a formula

Every forest model is a simplified version of reality. It captures key processes but always under assumptions: how trees grow, how they compete, how they respond to disturbances such as drought, fire, or pathogens. Even the best models can’t capture every nuance. And that’s okay.

Preference uncertainty: People don’t always agree

Forests aren’t managed by machines, they're managed by people. Foresters, policymakers, citizens, stakeholders, each with their own ideas of what matters most. Biodiversity? Timber yield? Carbon sequestration? Recreation? This leads to preference uncertainty, where the weights assigned to decision criteria vary. Some might care deeply about economic return, others might prioritize ecosystem health.

Climate scenario uncertainty: Choose your future

Forecasting the climate of 2080 is a bit like predicting the ending of a long, complicated novel. We don’t know whether emissions will follow a moderate path (maybe like the RCP 4.5 scenario?) or a high-impact one (RCP 8.5). We also don’t know what policies or technologies will exist by then. This is due to both aleatory uncertainty (natural randomness) and epistemic uncertainty (lack of knowledge). Instead of pretending to know, we can simulate across multiple plausible climate futures, and analyze how our forest strategies perform in each.

Spatial and aggregation uncertainty: Zoom out, things get blurry

When you move from single trees and small forest stands to larger management units or even entire regions, new uncertainties emerge. The resolution of your data, the way you group areas, and how you average things out all affect your results. Maybe species diversity looks great when measured at the stand level, but drops off when aggregated. Or maybe management impacts are visible only at a finer spatial scale. Either way, spatial uncertainty reminds us that scale matters.

AI-derived input uncertainty: Don’t let machine learning blind you

Modern forest models often rely on inputs generated by AI models: tree species maps from satellite imagery, health indices from drone data, biomass estimates from LiDAR. While AI can work wonders, it’s not infallible.

This adds a new layer of data-driven uncertainty, which comes as:

- Classification errors: That spruce stand? The model says “pine”. Oops.

- Model generalization: An AI trained in Bavaria might not behave well in the Black Forest.

- Domain shift: The model learned from summer leaf-on images… and now it's analyzing cloudy autumn scenes.

We can deal with this by treating AI outputs not as single values, but as distributions, just like any other uncertain input. For example, instead of saying “this pixel is 100% spruce”, we might say “70% spruce, 20% pine, 10% unknown”, and propagate that uncertainty forward into the simulation chain.
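As a rough illustration of treating a classifier's output as a distribution, the sketch below draws one plausible species label per pixel from its class probabilities. The pixel values, the class names, and the helper `sample_species_map` are made up for this example and do not come from any particular AI model or library.

```python
import random
from collections import Counter

# Hypothetical per-pixel class probabilities from a species classifier.
pixel_probs = [
    {"spruce": 0.7, "pine": 0.2, "unknown": 0.1},
    {"spruce": 0.1, "pine": 0.8, "unknown": 0.1},
    {"spruce": 0.5, "pine": 0.5, "unknown": 0.0},
]


def sample_species_map(probs, rng):
    """Draw one plausible species label per pixel from its class probabilities."""
    return [
        rng.choices(list(p.keys()), weights=list(p.values()), k=1)[0]
        for p in probs
    ]


rng = random.Random(42)  # fixed seed so the example is reproducible
draws = [sample_species_map(pixel_probs, rng) for _ in range(1000)]

# Across many draws, the first pixel is labelled spruce in roughly 70% of maps.
first_pixel_counts = Counter(draw[0] for draw in draws)
spruce_share = first_pixel_counts["spruce"] / len(draws)
print(f"pixel 0 labelled spruce in {spruce_share:.0%} of sampled maps")
```

Each sampled map can then be fed into the simulation chain as one possible version of the input data, instead of a single "best guess" map.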

Meet ProMCDA: the decision core of an “uncertain” forest planning system

You might be wondering: is this approach of explicitly working with uncertainties just theoretical? Good news! Most of it is already alive and kicking thanks to ProMCDA.

ProMCDA is a modular, open-source decision analysis framework tailored for multi-criteria decision analysis (MCDA) in complex, data-rich domains like forest management, available here.

It already tackles two crucial sources of uncertainty:

Model uncertainty

It supports probabilistic modeling of input parameters: no need to pretend we know exact values when we clearly don’t.

Preference uncertainty

Rather than locking in one set of stakeholder weights, ProMCDA runs Monte-Carlo simulations across many combinations reflecting diverse perspectives and ambiguity in priorities.

Through these features, ProMCDA already enables probabilistic rankings, rank acceptability indices, and stability analyses. This helps decision-makers see not just what performs best, but how reliable that performance is.
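To make the idea concrete, here is a minimal sketch of sampling both uncertain indicator values and random stakeholder weights, then scoring alternatives with a weighted sum. It does not use ProMCDA's actual API. The alternatives, the indicator means and standard deviations, and the helpers `sample_weights` and `sample_score` are assumptions chosen for illustration.

```python
import random


def sample_weights(n_criteria, rng):
    """Draw weights uniformly from the simplex (non-negative, summing to 1)."""
    raw = [rng.expovariate(1.0) for _ in range(n_criteria)]
    total = sum(raw)
    return [r / total for r in raw]


# Hypothetical normalized indicators (0-1): (mean, standard deviation) per criterion.
CRITERIA = ["biodiversity", "timber_yield", "carbon"]
ALTERNATIVES = {
    "close_to_nature": [(0.8, 0.05), (0.4, 0.10), (0.7, 0.05)],
    "intensive": [(0.3, 0.05), (0.9, 0.10), (0.5, 0.05)],
}


def sample_score(indicators, weights, rng):
    """Sample each indicator, clip it to [0, 1], and return the weighted sum."""
    values = [min(1.0, max(0.0, rng.gauss(mu, sd))) for mu, sd in indicators]
    return sum(w * v for w, v in zip(weights, values))


rng = random.Random(0)
scores = {name: [] for name in ALTERNATIVES}
for _ in range(2000):
    weights = sample_weights(len(CRITERIA), rng)  # one stakeholder perspective
    for name, indicators in ALTERNATIVES.items():
        scores[name].append(sample_score(indicators, weights, rng))

mean_scores = {name: sum(s) / len(s) for name, s in scores.items()}
print(mean_scores)
```

Normalizing independent exponential draws is one simple way to get weights that are uniform over all valid combinations; a real study might instead restrict the weights to ranges elicited from stakeholders.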

What follows is the natural next step: integrating additional uncertainty layers, such as climate, AI-derived data, and spatial aggregation into this probabilistic framework.

Mastering variability: a multi-level Monte-Carlo approach

Now that we have met the many faces of uncertainty, it is time to put them all together into a coherent system that doesn’t just tolerate uncertainty, but thrives on it.

This is a multi-level Monte-Carlo approach:

We simulate thousands of alternate realities. In each one, we tweak the climate scenario, the forest model parameters, the AI-derived inputs, the stakeholder preferences, and if needed even the way we aggregate spatial units. Based on what happens in the majority of these realities, we can tell which forest strategy comes out on top. If a particular strategy keeps performing well across all those variations, it’s probably a solid choice. Not perfect. Not certain. But robust.

Here’s how it works in practice:

The Simulation Workflow (a.k.a. the recipe for robustness)

Pick a climate scenario

Select one from a set of plausible climate futures, usually based on the RCP4.5, RCP6.0, or RCP8.5 scenarios.

Simulate the vegetation model

Feed in the climate data and simulate forest dynamics by incorporating uncertainties in growth rates, species responses, and other parameters.

Aggregate spatial units (optional)

Want to see how things look at the landscape level? Try different aggregation schemes and see how the results shift.

Sample stakeholder preferences

Generate random sets of weights for your decision criteria (like biodiversity vs. timber yield), and run the ProMCDA evaluation with weight uncertainty.

Include AI input variability

Don’t forget those remote sensing inputs. Instead of taking AI predictions at face value, treat them as probabilistic and draw samples accordingly.

Repeat 10,000 times

Run the whole thing over and over again. Each simulation is one possible future. Collect them all and run the ProMCDA evaluation with indicator uncertainty.
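The steps above can be sketched as a single loop. This is a toy version under strong assumptions: `toy_forest_model` is a hypothetical stand-in for a real vegetation model, every number in the lookup tables is invented, the optional spatial aggregation step is left out, and a plain weighted sum replaces the ProMCDA evaluation.

```python
import random

SCENARIOS = ["RCP4.5", "RCP6.0", "RCP8.5"]
STRATEGIES = ["business_as_usual", "mixed_species", "set_aside"]

# All numbers below are invented for illustration only.
CLIMATE_STRESS = {"RCP4.5": 0.1, "RCP6.0": 0.2, "RCP8.5": 0.4}
TIMBER_BASE = {"business_as_usual": 0.9, "mixed_species": 0.7, "set_aside": 0.2}
BIODIVERSITY_BASE = {"business_as_usual": 0.3, "mixed_species": 0.6, "set_aside": 0.9}
SPRUCE_EXPOSURE = {"business_as_usual": 1.0, "mixed_species": 0.5, "set_aside": 0.5}


def toy_forest_model(strategy, scenario, growth_factor, spruce_share):
    """Hypothetical stand-in for a vegetation model: returns (timber, biodiversity)."""
    stress = CLIMATE_STRESS[scenario]
    damage = stress * spruce_share * SPRUCE_EXPOSURE[strategy]
    timber = TIMBER_BASE[strategy] * growth_factor * (1.0 - damage)
    biodiversity = BIODIVERSITY_BASE[strategy] * (1.0 - 0.5 * stress)
    return timber, biodiversity


def run_simulation(n_runs, seed):
    """Count how often each strategy gets the best weighted score."""
    rng = random.Random(seed)
    wins = {s: 0 for s in STRATEGIES}
    for _ in range(n_runs):
        scenario = rng.choice(SCENARIOS)       # pick a climate scenario
        growth_factor = rng.uniform(0.8, 1.1)  # model parameter uncertainty
        spruce_share = rng.betavariate(7, 3)   # AI-derived input, mean 0.7
        w_timber = rng.random()                # stakeholder preferences
        w_biodiversity = 1.0 - w_timber
        scores = {}
        for s in STRATEGIES:
            timber, biodiversity = toy_forest_model(
                s, scenario, growth_factor, spruce_share
            )
            scores[s] = w_timber * timber + w_biodiversity * biodiversity
        wins[max(scores, key=scores.get)] += 1
    return wins


wins = run_simulation(n_runs=10_000, seed=1)
for strategy, count in wins.items():
    print(f"{strategy}: first in {count / 10_000:.1%} of runs")
```

Every source of uncertainty is redrawn in each run, so the win counts reflect all of them at once rather than one factor at a time.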

What do we get from all this?

Lots of results, which all provide insight.

Here are a few of the powerful decision metrics this approach gives us:

Robustness Scores

Want to know how often a particular strategy comes out on top? We track the frequency with which each option ranks first. The higher the number, the more consistently that strategy performs across all the uncertainty we throw at it.
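A minimal sketch of this first-rank frequency, assuming each simulation run is stored as a dictionary of strategy scores. The four runs and the function name `robustness_scores` are invented for the example.

```python
from collections import Counter


def robustness_scores(runs):
    """Share of runs in which each strategy has the highest score.

    runs: list of dicts mapping strategy name -> score for one simulation.
    Ties go to the strategy listed first in the run.
    """
    wins = Counter(max(run, key=run.get) for run in runs)
    return {s: wins[s] / len(runs) for s in runs[0]}


# Hypothetical scores from four simulation runs.
runs = [
    {"A": 0.82, "B": 0.75, "C": 0.60},
    {"A": 0.70, "B": 0.78, "C": 0.65},
    {"A": 0.88, "B": 0.80, "C": 0.55},
    {"A": 0.79, "B": 0.74, "C": 0.81},
]
print(robustness_scores(runs))  # {'A': 0.5, 'B': 0.25, 'C': 0.25}
```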

Score Distributions

Instead of saying “strategy A scores 87 points,” we show the range of scores it gets across simulations. This reveals the full spectrum of possible outcomes, not just a single number.
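One simple way to report such a range is a median with the 5th and 95th percentiles. The sketch below uses synthetic scores drawn from normal distributions purely as placeholder data; the `summarize` helper is an assumption, not part of any existing tool.

```python
import random
import statistics


def summarize(scores):
    """Median plus the 5th and 95th percentile of simulated scores."""
    cuts = statistics.quantiles(scores, n=20)  # 19 cut points: 5%, 10%, ..., 95%
    return {"p05": cuts[0], "median": statistics.median(scores), "p95": cuts[-1]}


rng = random.Random(3)
# Hypothetical simulated scores: similar centre, very different spread.
strategy_a = [rng.gauss(87, 8) for _ in range(5000)]
strategy_b = [rng.gauss(85, 2) for _ in range(5000)]

summary_a = summarize(strategy_a)
summary_b = summarize(strategy_b)
for name, summary in (("A", summary_a), ("B", summary_b)):
    print(name, {k: round(v, 1) for k, v in summary.items()})
```

Two strategies with similar medians can look very different once the spread is shown, which is exactly the information a single score hides.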

Sensitivity Analyses

How sensitive is your result to different factors? Preference weights? Climate scenarios? AI input uncertainty? We quantify it so you can see what really drives the decision, and what’s just background noise.
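One common way to quantify this is a first-order sensitivity index: the share of the output variance explained by one factor alone, Var(E[Y | X]) / Var(Y). The sketch below estimates it for discrete factors by grouping runs. The effect sizes and factor levels are invented, and this is only one of several possible sensitivity measures.

```python
import random
import statistics
from collections import defaultdict


def first_order_index(factor_values, outputs):
    """Estimate Var(E[Y | X]) / Var(Y) for a discrete factor X."""
    groups = defaultdict(list)
    for x, y in zip(factor_values, outputs):
        groups[x].append(y)
    overall_mean = statistics.fmean(outputs)
    between = sum(
        len(ys) * (statistics.fmean(ys) - overall_mean) ** 2
        for ys in groups.values()
    ) / len(outputs)
    total = statistics.pvariance(outputs)
    return between / total if total > 0 else 0.0


# Hypothetical effects: climate matters more than preferences in this toy setup.
SCENARIO_EFFECT = {"RCP4.5": 0.15, "RCP8.5": -0.15}
PREFERENCE_EFFECT = {"timber_first": 0.05, "nature_first": -0.05}

rng = random.Random(7)
scenarios, preferences, scores = [], [], []
for _ in range(5000):
    scenario = rng.choice(list(SCENARIO_EFFECT))
    preference = rng.choice(list(PREFERENCE_EFFECT))
    noise = rng.gauss(0.0, 0.02)
    scenarios.append(scenario)
    preferences.append(preference)
    scores.append(
        0.6 + SCENARIO_EFFECT[scenario] + PREFERENCE_EFFECT[preference] + noise
    )

scenario_index = first_order_index(scenarios, scores)
preference_index = first_order_index(preferences, scores)
print(f"climate scenario: {scenario_index:.2f}, preferences: {preference_index:.2f}")
```

An index close to 1 means the factor drives most of the variation in the result; an index close to 0 means it is mostly background noise.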

We also tap into the rich world of probabilistic decision metrics, like:

- Rank Acceptability Index (RAI): This measures how likely a strategy is to appear in each rank position. It’s like asking: “How often does this option land in first place, in second place, and so on?”

- Stability Intervals (SI): These tell us how much you can tweak the preferences (like stakeholder weights) before a strategy drops in ranking. Wider intervals mean a more stable, and therefore less risky, ranking.

- Regret Metrics: Ever picked a strategy and later thought “ugh, if only I had known”? Regret analysis simulates this by comparing what you chose to what would’ve been best in hindsight. The lower the average regret, the safer the bet.
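Two of these metrics are easy to sketch from a table of simulated scores. The example below computes rank acceptability shares and average regret for a handful of made-up runs; the function names and data are assumptions, and stability intervals are left out because they require re-running the ranking under shifted weights.

```python
def rank_acceptability(runs):
    """Return {strategy: [share of runs at rank 1, rank 2, ...]}."""
    strategies = list(runs[0])
    counts = {s: [0] * len(strategies) for s in strategies}
    for run in runs:
        ordered = sorted(strategies, key=lambda s: run[s], reverse=True)
        for position, s in enumerate(ordered):
            counts[s][position] += 1
    return {s: [c / len(runs) for c in row] for s, row in counts.items()}


def mean_regret(runs):
    """Average gap between each strategy's score and the best score in the same run."""
    strategies = list(runs[0])
    return {
        s: sum(max(run.values()) - run[s] for run in runs) / len(runs)
        for s in strategies
    }


# Hypothetical scores from four simulation runs.
runs = [
    {"A": 0.82, "B": 0.75, "C": 0.60},
    {"A": 0.70, "B": 0.78, "C": 0.65},
    {"A": 0.88, "B": 0.80, "C": 0.55},
    {"A": 0.79, "B": 0.74, "C": 0.81},
]

rai = rank_acceptability(runs)
regret = mean_regret(runs)
print(rai["A"])  # [0.5, 0.5, 0.0]: first in half the runs, second in the rest
print({s: round(r, 3) for s, r in regret.items()})
```

In this toy data, strategy A never wins by much, yet it never finishes last and has the lowest average regret, which is the kind of pattern these metrics are meant to surface.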

These tools don’t give us a perfect answer. But they do give us something better: a well-informed, risk-aware basis for action.

Towards a smarter and friendlier Forest DSS

When you put all of this together, what emerges is the blueprint for a next-generation Forest Decision Support System (FDSS). One that doesn’t just crunch numbers, but helps decision-makers explore complexity and navigate trade-offs with confidence. That system is a modular, spatial, interactive environment that lets users explore outcomes in context. It’s a future where decision support isn’t just technically sound, but also intuitively accessible, even when reality is messy.

In forest management, where planning horizons stretch decades into the future, and choices affect ecosystems, economies, and communities, pretending we know everything is far more dangerous than admitting we don’t. That’s what a probabilistic, uncertainty-aware decision system offers: no rigid answers, but flexible insights. A way to navigate the forest of possibilities, not by cutting a straight line through it, but by learning how to read the signs, follow the paths, and choose with confidence even when the future is fuzzy.

In the end, the smartest decision isn’t the one that’s “right”, it’s the one that holds up no matter what comes next.