Measuring Data Model Quality

wetransform is developing a data-driven approach to the design of data models. We believe this approach will help teams develop higher-quality shared specifications faster, with lower implementation risk.

In this data-driven approach, we aim to improve the quality of a data model with every iteration. That raises the question of what kinds of data and analysis we can use to measure the quality of a data model. In this article, we’ll share a bit of the reasoning behind our approach.

First of all, when we say data-driven, we mean four kinds of data:

- Data that can be derived from the model itself by static analysis

- Data that can be derived from vertically mapping the model to various implementation platforms

- Data that can be derived from comparison to other models

- Data that can be derived from usage of the data model

Let’s dip into each of these.

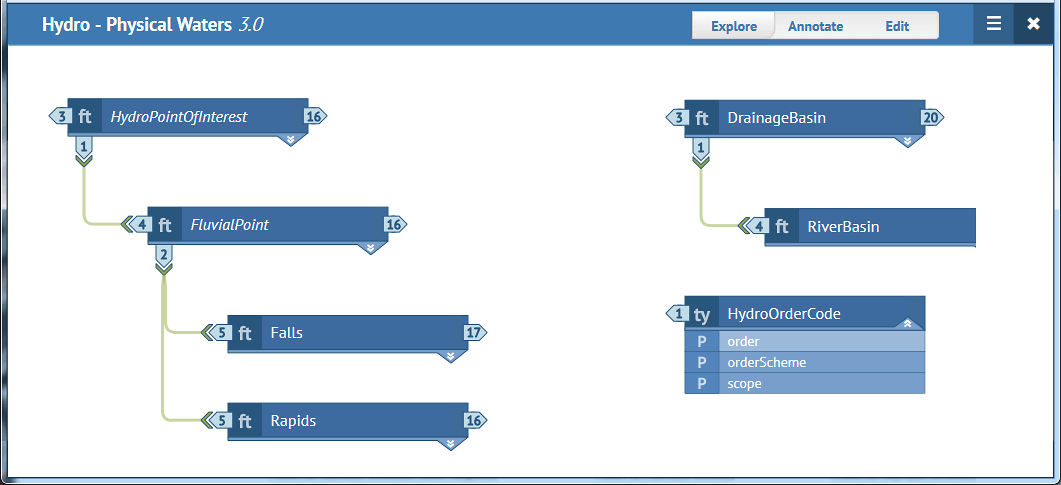

Static analysis of relational models and of object models has been around for a long time. There is some interesting research and development work, such as SDMetrics and UML Metrics Producer, but most of these ideas haven’t made it into typical design processes, unlike JSLint and other code analysers that are part of most build processes nowadays. The measures created in static analysis count types and properties to assess size, and identify loops and nesting depths to calculate structural complexity. They are especially helpful when dealing with transitive complexity: the model currently under design might seem simple, but it imports other models that contribute greatly, and in an opaque way, to its overall complexity. Some tools also look into behavioral complexity by analyzing the number and structure of the messages exchanged between objects in a model. Finally, there are solutions that can identify design patterns.
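
To make these measures concrete, here is a minimal sketch of size, loop and nesting-depth metrics, and of the complexity contributed by imported models. It is an illustration only, not the implementation of SDMetrics or of any wetransform tool. The dictionary-based model format, the example types (`Parcel`, `Person`, `Address`) and all function names are assumptions made for this example.

```python
from typing import Dict, FrozenSet, Set

# Hypothetical toy format: type name -> {property name: value type}.
# Value types that are not keys of the model count as primitives.
Model = Dict[str, Dict[str, str]]


def size_metrics(model: Model) -> Dict[str, int]:
    """Count types and properties as a simple size measure."""
    return {
        "types": len(model),
        "properties": sum(len(props) for props in model.values()),
    }


def reachable_types(model: Model, root: str) -> Set[str]:
    """All model types reachable from root through property references."""
    seen: Set[str] = set()
    stack = [root]
    while stack:
        current = stack.pop()
        if current in seen or current not in model:
            continue
        seen.add(current)
        stack.extend(model[current].values())
    return seen


def has_cycle(model: Model, root: str) -> bool:
    """True if following references from root can lead back to a type on the path."""
    visiting: Set[str] = set()
    done: Set[str] = set()

    def visit(type_name: str) -> bool:
        if type_name not in model or type_name in done:
            return False
        if type_name in visiting:
            return True
        visiting.add(type_name)
        found = any(visit(target) for target in model[type_name].values())
        visiting.discard(type_name)
        done.add(type_name)
        return found

    return visit(root)


def max_nesting_depth(model: Model, root: str,
                      _path: FrozenSet[str] = frozenset()) -> int:
    """Longest chain of nested types from root; back-references are not followed."""
    if root not in model or root in _path:
        return 0
    path = _path | {root}
    return 1 + max(
        (max_nesting_depth(model, target, path) for target in model[root].values()),
        default=0,
    )


# The model under design looks small on its own ...
local: Model = {"Parcel": {"id": "string", "owner": "Person", "address": "Address"}}
# ... but it imports types that reference each other.
imported: Model = {
    "Person": {"name": "string", "address": "Address"},
    "Address": {"street": "string", "resident": "Person"},
}
combined: Model = {**local, **imported}

print(size_metrics(local))                    # {'types': 1, 'properties': 3}
print(size_metrics(combined))                 # {'types': 3, 'properties': 7}
print(has_cycle(combined, "Parcel"))          # True
print(max_nesting_depth(combined, "Parcel"))  # 3
```

Comparing the metrics of `local` with those of `combined` shows how much of the overall complexity comes from imports rather than from the model under design.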

Vertical mapping is the process of transforming a conceptual model into logical models on various implementation platforms. Examples include mapping a UML model to an XML schema or a relational database schema, or mapping an ontology to an RDF schema. We measure properties of the vertical mapping to determine how well suited a conceptual model is for implementation on various platforms. Consider the following example: a complex conceptual model like the INSPIRE Data Specifications can be mapped well to XML, but it’s rather hard to map effectively to an Esri Geodatabase system.
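
As a rough illustration of what "properties of the vertical mapping" could look like, the sketch below maps a toy conceptual model to a relational layout and counts the resulting tables, columns and foreign keys. The mapping rules (one table per type, one extra table per multi-valued property) are a simplifying assumption for this example, not the rules of any particular platform or of our tooling. The model format and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical toy format: type name -> {property name: (value type, is multi-valued)}.
Model = Dict[str, Dict[str, Tuple[str, bool]]]


@dataclass
class RelationalFootprint:
    tables: int = 0
    columns: int = 0
    foreign_keys: int = 0
    extra_tables: int = 0  # tables that exist only to hold multi-valued properties


def map_to_relational(model: Model) -> RelationalFootprint:
    """Apply the assumed mapping rules and measure the resulting schema."""
    footprint = RelationalFootprint()
    for props in model.values():
        footprint.tables += 1  # one table per conceptual type
        for value_type, many in props.values():
            is_reference = value_type in model
            if many:
                # Multi-valued property: a separate table with an owner key
                # plus either a value column or a key of the referenced type.
                footprint.tables += 1
                footprint.extra_tables += 1
                footprint.columns += 2
                footprint.foreign_keys += 2 if is_reference else 1
            else:
                footprint.columns += 1
                if is_reference:
                    footprint.foreign_keys += 1
    return footprint


model: Model = {
    "Parcel": {
        "id": ("string", False),
        "owners": ("Person", True),
        "names": ("string", True),
    },
    "Person": {"name": ("string", False)},
}

footprint = map_to_relational(model)
print(footprint)
```

In this toy case, two conceptual types turn into four tables, which hints at how multi-valued properties make a model more expensive to implement on a relational platform.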

Comparative analysis helps us find out whether there are similar models, and tells us how the metrics gained from static analysis and vertical mapping analysis compare across those models. To identify similar models, we abstract them to graphs and then compare structures, value types and labels. After identifying similar models, we assess the model under design by seeing where it falls in its cohort: Is it by far the most complex model? Is it very small in comparison? Or is it highly connected to other models?
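
The following sketch shows one simple way to compare two models by structure, value types and labels, and to place a metric value within a cohort. It uses plain Jaccard set similarity as a stand-in technique. The actual graph comparison is not described in this article, so the model format, the equal weighting of the three aspects and all function names are assumptions.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical toy format: type name -> {property name: value type}.
Model = Dict[str, Dict[str, str]]


def jaccard(a: Set, b: Set) -> float:
    """Share of common elements; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def edges(model: Model) -> Set[Tuple[str, str]]:
    """Structure: (owning type, value type) pairs, ignoring property names."""
    return {(t, vt) for t, props in model.items() for vt in props.values()}


def value_types(model: Model) -> Set[str]:
    return {vt for props in model.values() for vt in props.values()}


def labels(model: Model) -> Set[str]:
    """Lower-cased type and property names."""
    names = {t.lower() for t in model}
    names |= {p.lower() for props in model.values() for p in props}
    return names


def similarity(a: Model, b: Model) -> float:
    """Unweighted mean of structure, value type and label similarity."""
    parts = [
        jaccard(edges(a), edges(b)),
        jaccard(value_types(a), value_types(b)),
        jaccard(labels(a), labels(b)),
    ]
    return sum(parts) / len(parts)


def share_below(value: float, cohort: Iterable[float]) -> float:
    """Fraction of cohort values strictly below the given value."""
    values = list(cohort)
    return sum(1 for v in values if v < value) / len(values) if values else 0.0


model_a: Model = {
    "Parcel": {"id": "string", "owner": "Person"},
    "Person": {"name": "string"},
}
model_b: Model = {
    "Parcel": {"id": "string", "holder": "Person"},
    "Person": {"name": "string"},
}

print(round(similarity(model_a, model_b), 3))  # 0.889
print(share_below(7, [3, 5, 7, 12]))           # 0.5
```

Here the two models have identical structure and value types and differ only in one property label, so they score as close but not identical. `share_below` answers the cohort question for a single metric, for example the property count of the model under design.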

Usage analysis is core to understanding the quality of a model. It encompasses several different types of measures:

- Effectiveness of the model: How large and complex is an actual instance of an object graph? How efficiently can the instance be created and parsed?

- Coverage of the model: How much of the model is actually used? Are there hot spots in usage? Are there points where the model is not differentiated enough?

- Usage: Which parts of the actual instances are actually consumed by downstream applications? Is there data in the model that is never used?
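
The coverage questions above can be sketched as a count of how often each property of the model is populated in real instances. This is a minimal sketch under assumptions: the instance format (a `type` plus a `values` dictionary), the example data and all function names are hypothetical, and real instances would typically come from XML or database records rather than Python dictionaries.

```python
from typing import Any, Dict, List, Tuple

# Hypothetical toy formats.
Model = Dict[str, Dict[str, str]]   # type name -> {property name: value type}
Instance = Dict[str, Any]           # {"type": ..., "values": {property: value}}
Usage = Dict[Tuple[str, str], int]  # (type, property) -> number of populated values


def property_usage(model: Model, instances: List[Instance]) -> Usage:
    """Count populated values per model property; unknown properties are ignored."""
    usage: Usage = {(t, p): 0 for t, props in model.items() for p in props}
    for instance in instances:
        type_name = instance["type"]
        for prop, value in instance["values"].items():
            if (type_name, prop) in usage and value is not None:
                usage[(type_name, prop)] += 1
    return usage


def coverage(usage: Usage) -> float:
    """Share of model properties that are populated at least once."""
    if not usage:
        return 0.0
    return sum(1 for count in usage.values() if count > 0) / len(usage)


def unused(usage: Usage) -> List[Tuple[str, str]]:
    """Properties that never carry a value in the given instances."""
    return sorted(key for key, count in usage.items() if count == 0)


def hot_spots(usage: Usage, top: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """The most frequently populated properties."""
    return sorted(usage.items(), key=lambda item: item[1], reverse=True)[:top]


model: Model = {
    "Parcel": {"id": "string", "owner": "Person", "area": "float"},
    "Person": {"name": "string", "birthDate": "date"},
}
instances: List[Instance] = [
    {"type": "Parcel", "values": {"id": "p1", "owner": "person-1", "area": None}},
    {"type": "Parcel", "values": {"id": "p2"}},
    {"type": "Person", "values": {"name": "Ada"}},
]

usage = property_usage(model, instances)
print(coverage(usage))   # 0.6
print(unused(usage))     # [('Parcel', 'area'), ('Person', 'birthDate')]
print(hot_spots(usage, top=1))
```

The same counting could be applied to access logs of consuming applications instead of instances, to see which populated properties are actually read.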

We do not combine these individual metrics into more abstract joint scores. Designers have to look at each value, most of them unitless, and decide which goal they want to pursue in their next iteration: more effective storage in relational database systems? Less model excess? They can then apply the modification and see its effect on the primary metric as well as on all the other metrics.

Stay tuned for further updates on agile, data-driven model design!